In our latest review we take a look at NVIDIA’s latest graphics circuit from a more down-to-earth perspective than we have done so far. We simply offer you a picture of how the GeForce 8800 GTX behaves under normal circumstances.

New graphics cards are launched every week, but these are usually third-party manufacturers releasing new cards based on whichever circuits are most popular at the moment. Every few months come the bigger launches, when NVIDIA and ATI launch their new series of graphics cards, and then there are obviously a lot more interesting things to write about. NVIDIA’s G71 was a nice update when the GeForce 7900 series was launched, and the same applies to ATI’s equivalent, the R580 and the X1900 series. But to be honest there wasn’t much that separated the new graphics circuits from the old ones. Sure, there was a big boost in performance, but our taste has grown a bit too sophisticated to be completely satisfied by these launches. The occasions we’re really longing for are the ones when NVIDIA and ATI decide to start over and launch a whole new architecture, which is just what NVIDIA has done with the launch of the G80 architecture.

We have already published a short preview of NVIDIA’s new G80 architecture and, primarily, its new flagship GeForce 8800 GTX, but with NVIDIA launching a whole new architecture we weren’t happy with just offering you a preview. The next step of our coverage is the full-scale review you’re reading now. In it we will dig deeper into how NVIDIA has designed its new architecture, how it differs from current graphics card architectures and, of course, how GeForce 8800 GTX performs in comparison with the competition. First of all we will take a look at GeForce 8800 GTX and how the G80 architecture has been designed to work.

The graphics card we’ve longed for is finally here. GeForce 8800GTX has been in our test lab for some time now and is available in stores worldwide. When ATI or NVIDIA launch a new graphics card they usually claim it’s a new architecture, but the truth is often closer to a refined or extended version of an already proven architecture. In this case the claim is entirely true, though: the graphics circuit housed on the 8800GTX’s long black PCB is of a completely new design. We start off with a comparison of the specifications.

* NVIDIA reference frequencies; may vary between manufacturers.

** NVIDIA reference; depends on the frequencies.

When NVIDIA dedicated a group to developing G80 in 2002, the group was given several goals, of which the two most important were significantly better performance and improved image quality with new features. The question was how to get there: continue down the same path or make a fresh start? Everything that exists will reach a point where radical changes have to be made to the base architecture for the result to be noticeable. Perhaps not the most sensible sentence, but it gives us a good starting point for explaining the great leap NVIDIA has made with its new generation of graphics processors. There are also examples of radical changes that have just made things worse. One example, as the story goes, is when the dictator of China, Mao, decided that the red color of communism would mean ‘Go’ and that green would mean ‘Stop’. As expected, this resulted in massive traffic jams and many casualties as total chaos spread in the streets.

The point is that we all knew G80 would be a radical change compared to previous graphics processor architectures. This caused quite a bit of confusion and was the basis of many rumors, which spread like wildfire over the Internet. The largest concern was that NVIDIA could have compromised the performance of existing DirectX 9-based applications to make room for DirectX 10. With the results in hand we can only conclude that this is not the case. G80 and GeForce 8800GTX deliver fantastic performance alongside their image quality.

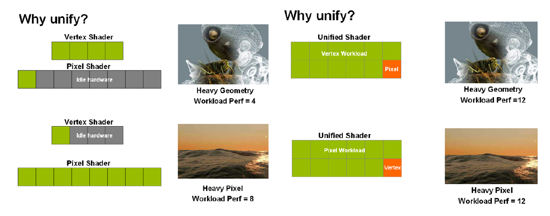

The fundamental change we’re trying to bring out is the ‘new’ term “Unified Shader”. Previous generations of graphics processors have followed a fixed template with a set sequence, where pixel data was sent to the pixel shaders and vertex data was sent to the vertex shaders. Let’s imagine a graphics card with only 10 shaders all in all: 6 for pixel instructions and 4 for vertex instructions.

To simplify, we will say that all real-time rendering is a balance between pixel and vertex shading. Each environment and event in a game uses a different mix of pixel and vertex instructions. At one split second we may have a load of 90% pixel shading, and the next the roles are reversed. In the first case we would have a full pixel pipe while the vertex side is practically empty. This means that previous generations of graphics processors had a lot of unused capacity. There is nothing odd about that; it has always been like that. With unified shaders this is a thing of the past, as all of G80’s 128 shaders can be dynamically allocated and perform both pixel and vertex instructions (and also geometry instructions for DX10), which means that the efficiency will be considerably higher than before.
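The utilization argument can be made concrete with a small sketch. It uses the ten-shader example (6 pixel, 4 vertex) and the 90% pixel load from the text. The cost model (identical shaders, one unit of work per shader per time step, work spread evenly) and all function names are illustrative assumptions, not a description of how G80 actually schedules work.

```python
def fixed_utilization(pixel_work, vertex_work, pixel_units=6, vertex_units=4):
    """Utilization of a fixed pixel/vertex split (toy model, an assumption).

    Each unit completes one unit of work per time step and can only
    process its own kind of work.
    """
    # The frame is done when the slower of the two pools has finished.
    time = max(pixel_work / pixel_units, vertex_work / vertex_units)
    capacity = (pixel_units + vertex_units) * time
    return (pixel_work + vertex_work) / capacity


def unified_utilization(pixel_work, vertex_work, units=10):
    """Utilization when every unit can take either kind of work."""
    total = pixel_work + vertex_work
    time = total / units  # work is spread evenly over all units
    return total / (units * time)


# 90% pixel load, then the reversed case, on 10 units of work.
for pixel, vertex in [(9, 1), (1, 9)]:
    print(pixel, vertex,
          round(fixed_utilization(pixel, vertex), 2),
          round(unified_utilization(pixel, vertex), 2))
```

In this toy model the fixed split keeps roughly two thirds of the shaders busy in the pixel-heavy case and less than half in the vertex-heavy case, while the unified pool stays fully occupied.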

After this short presentation of the biggest difference between the past and the present, we will take a closer look at the architecture on the next page, focusing on G80’s new memory interface.

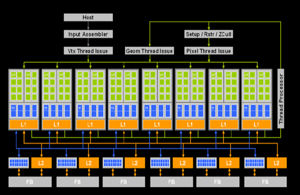

As we’ve mentioned, GeForce 8800GTX implements 128 scalar streaming processors (shaders), each working at 1350MHz. The fact that they are all scalar and completely independent of each other makes it possible to utilize the GPU’s resources to the fullest, something both ATI and NVIDIA have always wanted. ATI’s coming graphics circuit, better known as R600, will work in a similar way.

Complete block diagram of the G80 circuit

The green blocks illustrate how the streaming processors are grouped and placed in G80. The biggest difference between the 8800’s streaming processors and the 7900’s shaders is that they are clocked at considerably higher frequencies (1350MHz vs. 650MHz) and can be placed closer to each other in large groups, with more of them on the same surface. Each streaming processor produces a data segment which another streaming processor can receive and continue working with. The cache is used to store these data segments or data streams.

One of the big news with the 8800’s streaming processors is that they are scalar instead of vector-based. A vector-based design is completely natural, as many graphics instructions take a vector form, e.g. RGBA for the color of a pixel. But scalar instructions are getting more and more common in new graphics applications, so it’s without a doubt a clever move by NVIDIA to build the processors this way from the start. According to NVIDIA, a scalar shader can theoretically offer up to twice the performance if the application is written with scalar shaders in mind. But the gain also shows if we just punch in a few numbers, taking two instructions as an example. Let the first be a multiplication of a pixel group by two variables (pixels*xy) and the second an addition of another variable to the same pixel group (pixels+a). We then apply these instructions to 16 pixels. To understand this you need to know that one of the 7900’s shaders works with four pixels per clock cycle and can execute two different instructions each cycle (e.g. a multiplication and an addition), while the 8800’s scalar shaders work with 16 pixels and can execute one instruction per clock cycle (do note that this is a gross simplification). The 8800 has an interpretation unit which interprets the instructions in vector format and splits the first instruction into two parts (pixel group*x and pixel group*y), giving three scalar instructions in total. The 7900 thus needs 4 cycles while the 8800 only needs 3 to finish the operation. That is 25% fewer clock cycles using the same resources.
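The cycle counts in this example can be checked with a few lines of Python. This is only a sketch of the simplified model described in the text; the function names and the way work is rounded up to whole cycles are assumptions made for illustration, not a model of the real hardware.

```python
import math


def vector_shader_cycles(pixels, pixels_per_cycle=4):
    """Cycles for a 7900-style shader in the simplified example.

    It handles 4 pixels per cycle and issues the multiply and the add
    together, so both instructions finish in the same pass.
    """
    return math.ceil(pixels / pixels_per_cycle)


def scalar_shader_cycles(pixels, scalar_instructions, pixels_per_cycle=16):
    """Cycles for an 8800-style scalar group in the simplified example.

    It handles 16 pixels per cycle but only one scalar instruction at a time.
    """
    return math.ceil(pixels / pixels_per_cycle) * scalar_instructions


# pixels*xy is split into pixels*x and pixels*y; pixels+a is the third op.
old = vector_shader_cycles(16)
new = scalar_shader_cycles(16, scalar_instructions=3)
print(old, new, 1 - new / old)
```

With 16 pixels the sketch gives 4 cycles for the vector case and 3 for the scalar case, i.e. a quarter fewer cycles.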

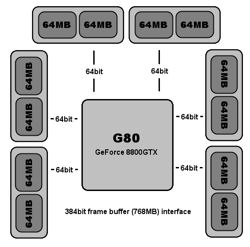

For the raw power of the 8800 to really come to use, you need a powerful interface for storing finished pixels and textures. To meet this demand NVIDIA has increased the number of ROPs from 16 to 24 and the number of memory channels to six, with 128MB in each channel (768MB in total). Each channel has its own 64-bit interface, which results in a total of 384 bits. It’s the same principle for graphics cards as for regular system RAM: single-channel DDR2 has a 64-bit bus and dual-channel has a bus of 2×64=128 bits.
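The arithmetic behind these figures is simple enough to write down directly. The snippet below is just a sanity check of the numbers quoted in the text; the helper name `total_bus_width` is made up for this illustration.

```python
def total_bus_width(channels, bits_per_channel=64):
    """Total memory bus width in bits for a number of independent channels."""
    return channels * bits_per_channel


# GeForce 8800 GTX figures quoted in the text.
CHANNELS = 6
MB_PER_CHANNEL = 128

gpu_bus_bits = total_bus_width(CHANNELS)        # six 64-bit channels
gpu_memory_mb = CHANNELS * MB_PER_CHANNEL       # 128MB per channel

# The system RAM comparison: single- and dual-channel DDR2.
single_channel_bits = total_bus_width(1)
dual_channel_bits = total_bus_width(2)

print(gpu_bus_bits, gpu_memory_mb, single_channel_bits, dual_channel_bits)
```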

An illustration of how the memory interface is designed

We will continue by taking a look at the image quality features of GeForce 8800 GTX.

With GeForce 8800, NVIDIA introduces a new concept for its commitment to better image quality. It brings four new antialiasing modes for single-GPU systems: 8x, 8xQ, 16x and 16xQ. The difference between the regular modes and 8xQ and 16xQ is that the latter two offer extra high quality. New to NVIDIA is that 8800GTX also supports HDR and antialiasing at the same time (both FP16 and FP32). Until now this has only worked with ATI’s Radeon X1000 series. Worth mentioning is that games such as Oblivion don’t have native support for HDR+AA. You therefore have to deactivate AA in the game’s settings and instead activate AA through NVIDIA’s control panel; the same goes for ATI. This has nothing to do with NVIDIA’s or ATI’s software or hardware, but with the fact that the game hasn’t been designed to run HDR when AA is activated.

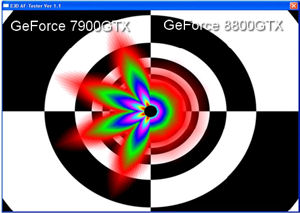

Anisotropic Filtering is an image quality function which increases the depth and clarity of textures. Here, too, we find a function that is new to NVIDIA and thus to GeForce 8800GTX: Anisotropic Filtering now also works on textures at an angle. ATI’s X1000 series already supports this. In any case, “Angular AF” improves the total effect of Anisotropic Filtering. In the tests we performed with GeForce 7900GTX, Radeon X1950XTX and GeForce 8800GTX in games such as Oblivion and Half Life 2, GeForce 8800GTX displays a marginally better result than Radeon X1950XTX and a significantly better one than GeForce 7900GTX. To illustrate the difference we’ve arranged two synthetic examples using D3D AF tests 1.1.

Comparison between GeForce 7 and GeForce 8

Comparison between Radeon X1950XTX and GeForce 8

Anisotropic Filtering is very demanding on the memory. GeForce 8800GTX’s new and efficient memory interface, with a total buffer of 768MB, really does its job: you hardly notice that you’re using Anisotropic Filtering instead of regular Isotropic Filtering until you move up to 16x. It’s pretty clear that GeForce 8800GTX doesn’t just offer raw performance but also astonishing image quality.

We continue with a look at the test system before we head on to the benchmarks.

| Test system | |
| --- | --- |
| Hardware | |
| Motherboard | Intel Bad Axe 2 |
| Processor | Intel Core 2 Extreme X6800 |
| Memory | Corsair PC6400C3 2x1024MB |
| Harddrive | WD Raptor 36GB 10 000 RPM |
| Monitor | Dell UltraSharp 2405FPW |
| Graphics card | XFX GeForce 8800GTX 768MB, ATI Radeon X1950XTX 512MB |
| Power supply | Cooltek 600W |
| Software | |
| Operating system | Windows XP (SP2) |
| Drivers | Catalyst 6.10, ForceWare 96.89 |
| Test program | 3DMark 01SE |

| Settings: | Value: |
| --- | --- |
| Soft Shadows | Off |

F.E.A.R is still one of the games that places the highest demands on graphics cards. The rest of the system is only a small bottleneck here, which makes F.E.A.R one of the most suitable game tests for graphics cards. We can therefore clearly see how 8800GTX crushes the competition.

Next up is Quake 4.

Quake 4 uses the Doom 3 engine, which has always favored NVIDIA’s graphics cards. If GeForce 8800GTX was crushing in the last test, it leaves the opposition completely devastated here, far, far behind. At 1920×1200 the 8800GTX delivers twice the fps of the 7900GTX.

Next up is Tomb Raider: Legend.

| Setting: | Value: |
| --- | --- |
| Next Gen | On |

The newest member of our game tests is the latest addition to the game series starring Lara Croft, Tomb Raider: Legend. This is a demanding game that uses several brand-new rendering technologies. Here the entire system, including the graphics card, plays a part. With Next Gen and HDR activated it is one of the heaviest games of today. 8800GTX displays its great strength, even when we use image-quality-improving technologies.

We continue with Half Life 2: Lost Coast.

| Setting: | Value: |
| --- | --- |
| Next Gen | On |

Half Life 2, with its Source engine, has displayed great flexibility when it comes to being reworked and having new technologies implemented. In fact, the Source engine supports HDR+AA no matter what DX9 graphics card you use. Thus, you can run HDR+AA even with NVIDIA’s GeForce 7900GTX in Half Life 2. 8800GTX brings home the performance title by a surprisingly small margin.

The next game is Oblivion.

Oblivion is the latest game in the Elder Scrolls series and offers very impressive graphics for a role-playing game, with a lot of outdoor environments. GeForce 8800GTX with its enormous performance really gets to show what it can do here: we see twice the fps of the opposition.

NVIDIA has developed a whole new graphics circuit architecture with G80, something many doubted would even arrive just a year ago. Everyone was talking about ATI and how it would be first to launch a unified shader architecture, especially as it had developed a similar circuit for Microsoft’s video game console, the Xbox 360. At the same time, the first reports about G80 said that NVIDIA didn’t feel the market was ready for a unified shader architecture. Like so many other rumors about G80 this turned out to be false, and NVIDIA has now delivered a whole new and very exciting graphics circuit architecture, which will not only be interesting with Windows Vista and DirectX 10 but has already shown what it can do today. Many were afraid that G80, with its unified architecture, would have a hard time keeping up before DirectX 10 and Vista were launched, but we can gladly tell you that this is not the case.

Already a few weeks ago we had a lot of positive things to say about NVIDIA and its GeForce 8800 GTX graphics card, which we had then only had in our possession for a few days. In our preview, where we focused on overclocking the card instead, we could see what a huge performance potential the G80 architecture holds, and GeForce 8800 GTX especially. Before offering our final judgment on NVIDIA’s latest flagship we wanted to give the card a real challenge in the environment we believe most GeForce 8800 GTX graphics cards will be working in: a high-end gaming computer where one is looking for optimal performance and image quality.

Today there is neither Windows Vista nor DirectX 10, and we don’t even have to mention the shortage of DirectX 10 games. We therefore have no other choice than to test NVIDIA’s new flagship with today’s games, which are based on DirectX 9 and Shader Model 3.0. Those who have been doubting that a unified shader architecture would be able to handle the games of today we can only direct to our benchmarks and ask to study the results. GeForce 8800 GTX completely crushes the opposition in today’s games as well, and the more graphical effects we add, the bigger the difference gets. ATI’s Radeon X1950 XTX, which up until now had been the fastest single-GPU graphics card on the market, doesn’t stand a chance, and the same goes for NVIDIA’s GeForce 7950GX2 with dual G71 cores. It’s simply a whole new generation of graphics circuit we see here, and this is easily observed by studying the performance.

NVIDIA has done its homework when it comes to image quality. As our tests have shown, NVIDIA has not only updated its image quality technologies but also delivers completely new antialiasing modes for single-GPU graphics cards, for those who want to reach that close to unimaginable image quality. As we mentioned in our preview, GeForce 8800 GTX is a mighty card with a just as mighty and heavy cooler. But it’s needed, as the close to 700 million transistors dissipate a lot of heat. NVIDIA has managed to cool its card really efficiently and it’s actually quite quiet; the heat and the power consumption are really the only two noticeable drawbacks of GeForce 8800 GTX. The last piece of the puzzle is of course the price, which can be a bit much for some: you have to pay up to $650 to get a card of your own. But you should realize that you then get a graphics card that delivers more performance than two GeForce 7900 GTX cards in SLI, which will cost you even more. GeForce 8800 GTX is simply the best graphics card on the market, and the only question you need to ask yourself is whether you really need all of this power. If the answer is yes, then money shouldn’t be an issue considering what other components you need to feed a GeForce 8800 GTX. XFX GeForce 8800 GTX is without a doubt worthy of our Editor’s Choice award and we’re looking forward to more cards based on NVIDIA’s G80 architecture.

XFX 8800 GTX