Revolutionizing Digital Asset Management: The Power of Semantic and Multimodal Search

In today's data-driven world, efficient digital asset management has become a critical factor in organizational success. As businesses accumulate vast repositories of digital content, searching, retrieving, and using these assets effectively has become steadily harder. Enter semantic and multimodal search technologies: powerful tools that are transforming how we interact with and extract value from our digital assets.

The Evolution of Search in Digital Asset Management

Traditional search methods, relying primarily on keywords and metadata, have long been the standard in digital asset management. However, these approaches often fall short when dealing with complex, diverse, and voluminous data sets. Let's explore how semantic and multimodal search are addressing these limitations and opening up new possibilities for asset discovery and utilization.

Understanding Semantic Search

Semantic search goes beyond simple keyword matching to understand the intent and contextual meaning of a search query. It leverages natural language processing (NLP) and machine learning techniques to interpret the relationships between words, concepts, and data.

Key benefits of semantic search in digital asset management include:

- Improved relevance: By understanding context, semantic search delivers more accurate results.

- Natural language queries: Users can search using conversational language rather than specific keywords.

- Concept-based retrieval: Assets can be found based on related concepts, even if exact keywords are not present.

- Disambiguation: The system can differentiate between multiple meanings of the same word based on context.

The Rise of Multimodal Search

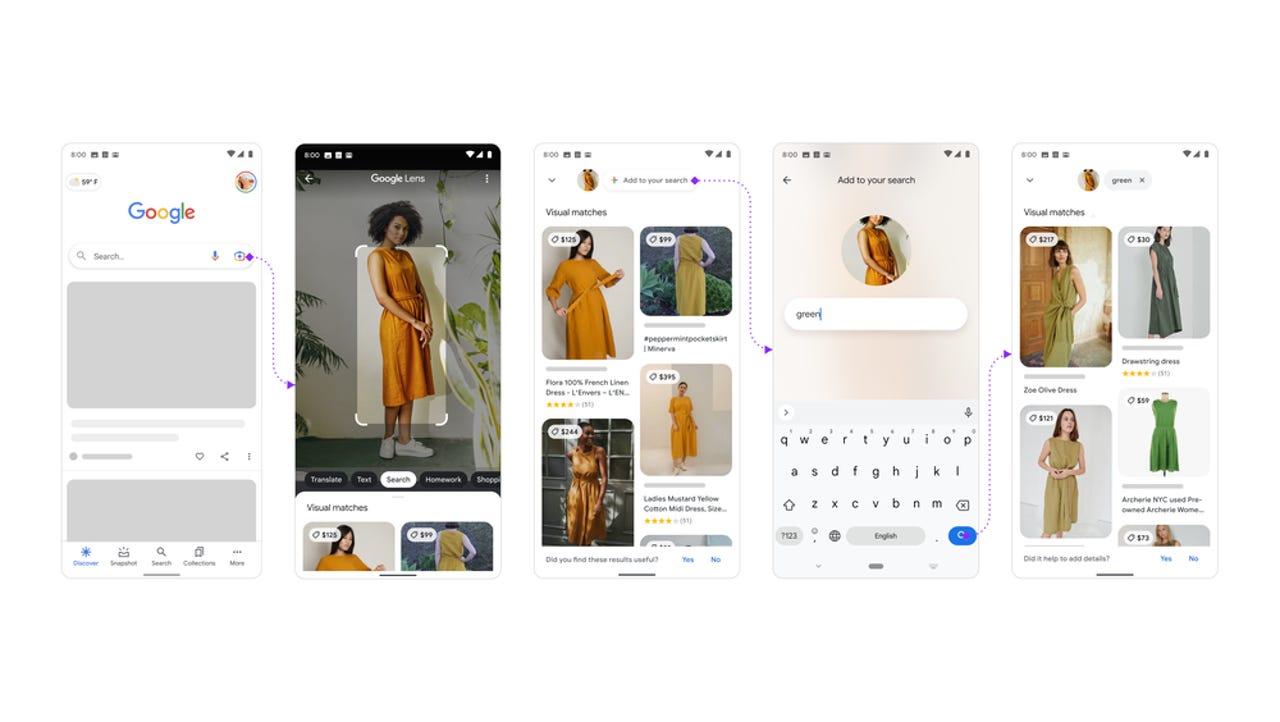

Multimodal search takes things a step further by integrating multiple types of data and search modalities. This approach combines text, images, audio, and even video to provide a more comprehensive and intuitive search experience.

Advantages of multimodal search for digital asset management:

- Cross-format discovery: Find assets across different file types and formats using a single query.

- Visual similarity search: Locate visually similar images or videos without relying on text descriptions.

- Audio transcription and search: Convert speech to text for searchable audio and video content.

- Gesture and sketch-based queries: Use drawings or gestures to search for visual assets.

Implementing Semantic and Multimodal Search

For developers and organizations looking to implement these advanced search capabilities, several key technologies and approaches come into play:

1. Vector Embeddings

At the heart of both semantic and multimodal search lies the concept of vector embeddings. These are dense numerical representations of data that capture semantic meaning and relationships.

Key points:

- Text embeddings: Models like Word2Vec and GloVe produce static word vectors, while BERT-style models produce context-dependent vector representations of text.

- Image embeddings: Convolutional Neural Networks (CNNs) like ResNet or VGG can generate visual feature vectors.

- Cross-modal embeddings: Techniques like Visual-Semantic Embeddings (VSE) allow for unified representations across different modalities.
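
To make the idea concrete, here is a minimal sketch of ranking assets by cosine similarity between embeddings. The three-dimensional vectors, file names, and the `rank_assets` helper are made up for illustration; a real system would get its vectors from one of the models above and use far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat a zero vector as similar to nothing.
    return dot / (norm_a * norm_b)


# Hypothetical hand-written embeddings; not produced by any real model.
ASSET_EMBEDDINGS = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "quarterly_report.pdf": [0.0, 0.2, 0.9],
    "ocean_waves.mp4": [0.8, 0.3, 0.1],
}


def rank_assets(query_vector, embeddings):
    """Return (asset, score) pairs, most similar first."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embedding of a query such as "seaside at dusk".
query = [1.0, 0.2, 0.0]
ranking = rank_assets(query, ASSET_EMBEDDINGS)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

Because the comparison happens in vector space, the photo and the video can both rank above the report even though no keyword is shared with the query.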

2. Similarity Search Algorithms

Once data is represented as vectors, efficient similarity search algorithms are crucial for fast retrieval:

- Approximate Nearest Neighbor (ANN) algorithms: Methods like HNSW (Hierarchical Navigable Small World) graphs or IVF (inverted file) indexes trade a small amount of accuracy for scalable similarity search.

- Locality-Sensitive Hashing (LSH): An approximate technique that hashes similar vectors into the same bucket with high probability, so only a small set of candidates has to be compared in high-dimensional spaces.
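
As a rough illustration of the hashing idea, the sketch below implements random-hyperplane LSH, one common LSH variant for cosine similarity. It is a toy under stated assumptions: the function names, the choice of 8 hyperplanes, and the sample vectors are all hypothetical, and production systems typically rely on a dedicated ANN library rather than code like this.

```python
import random
from collections import defaultdict


def make_hyperplanes(num_planes, dim, seed=0):
    """Draw random hyperplane normals; a fixed seed keeps runs repeatable."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]


def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: '1' if the vector is on its non-negative side."""
    bits = []
    for plane in hyperplanes:
        dot = sum(v * p for v, p in zip(vector, plane))
        bits.append("1" if dot >= 0 else "0")
    return "".join(bits)


def build_index(vectors, hyperplanes):
    """Group asset names into buckets keyed by their signature."""
    buckets = defaultdict(list)
    for name, vector in vectors.items():
        buckets[lsh_signature(vector, hyperplanes)].append(name)
    return buckets


def candidates(query, buckets, hyperplanes):
    """Return only the assets that share the query's bucket."""
    return list(buckets.get(lsh_signature(query, hyperplanes), []))


# Hypothetical vectors for illustration.
vectors = {
    "beach_sunset.jpg": [0.9, 0.1, 0.0],
    "quarterly_report.pdf": [0.0, 0.2, 0.9],
}
planes = make_hyperplanes(num_planes=8, dim=3, seed=42)
index = build_index(vectors, planes)
print(candidates([0.9, 0.1, 0.0], index, planes))
```

Vectors pointing in similar directions tend to fall on the same side of most hyperplanes, so they are likely to share a bucket. Using more hyperplanes makes buckets smaller and faster to scan, at the cost of missing more true neighbors.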

3. Knowledge Graphs

Knowledge graphs provide a structured representation of entities and their relationships, enhancing semantic understanding:

- Ontology development: Define domain-specific concepts and relationships.

- Entity linking: Connect mentions in text to knowledge graph entities.

- Reasoning engines: Infer new relationships and enhance query understanding.
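
The three ideas above can be sketched with a tiny in-memory graph. Everything here is an assumption made for illustration: the triples, the `is_a` relation name, and the helper functions. The entity linker in particular is a naive substring match, far simpler than what a real system would use.

```python
# Hypothetical (subject, relation, object) triples standing in for an ontology.
TRIPLES = [
    ("golden retriever", "is_a", "dog"),
    ("labrador", "is_a", "dog"),
    ("dog", "is_a", "animal"),
    ("dog", "related_to", "pet"),
]


def known_entities(triples):
    """Collect every entity name that appears in the graph."""
    names = set()
    for subj, _rel, obj in triples:
        names.update((subj, obj))
    return names


def link_entities(text, triples):
    """Naive entity linking: graph entities mentioned verbatim in the text."""
    lowered = text.lower()
    return sorted(name for name in known_entities(triples) if name in lowered)


def infer_ancestors(triples, entity):
    """Simple reasoning: follow is_a edges upward, transitively."""
    ancestors = []
    frontier = [entity]
    while frontier:
        current = frontier.pop()
        for subj, rel, obj in triples:
            if rel == "is_a" and subj == current and obj not in ancestors:
                ancestors.append(obj)
                frontier.append(obj)
    return ancestors


def expand_query(triples, term):
    """Query expansion: the term plus every narrower concept beneath it."""
    expanded = [term]
    frontier = [term]
    while frontier:
        current = frontier.pop()
        for subj, rel, obj in triples:
            if rel == "is_a" and obj == current and subj not in expanded:
                expanded.append(subj)
                frontier.append(subj)
    return expanded


print(link_entities("A golden retriever on the beach", TRIPLES))
print(infer_ancestors(TRIPLES, "golden retriever"))
print(expand_query(TRIPLES, "animal"))
```

With expansion in place, a search for "animal" can also match assets tagged only as "labrador", which plain keyword matching would miss.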

4. Multimodal Fusion Techniques

For truly integrated multimodal search, fusion techniques are essential:

- Early fusion: Combine features from different modalities into a single representation before any further processing.

- Late fusion: Process each modality separately, then combine the resulting scores or rankings.

- Hybrid fusion: Use a combination of early and late fusion strategies.
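
The difference between early and late fusion can be shown in a few lines. This is a simplified sketch: concatenation and a weighted sum are only one possible choice for each strategy, and the scores, file names, and `text_weight` parameter are hypothetical.

```python
def early_fusion(text_vector, image_vector):
    """Early fusion: concatenate per-modality features into one vector."""
    return list(text_vector) + list(image_vector)


def late_fusion(text_scores, image_scores, text_weight=0.5):
    """Late fusion: weighted sum of scores computed separately per modality.

    An asset missing from one modality contributes 0.0 for that modality.
    """
    image_weight = 1.0 - text_weight
    assets = set(text_scores) | set(image_scores)
    fused = {
        asset: text_weight * text_scores.get(asset, 0.0)
        + image_weight * image_scores.get(asset, 0.0)
        for asset in assets
    }
    # Highest fused score first; ties broken by name for a stable order.
    return sorted(fused.items(), key=lambda pair: (-pair[1], pair[0]))


# Hypothetical per-modality relevance scores for one query.
text_scores = {"a.jpg": 0.9, "b.jpg": 0.4}
image_scores = {"b.jpg": 0.8, "c.jpg": 0.6}

print(early_fusion([0.1, 0.2], [0.7]))
print(late_fusion(text_scores, image_scores))
```

A hybrid approach could, for example, feed an early-fused vector to one model while still blending in separately computed scores as `late_fusion` does here.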

Real-World Applications and Case Studies

Let's explore some concrete examples of how semantic and multimodal search are being applied in various industries:

1. Media and Entertainment

A major streaming platform implemented multimodal search to improve content discovery:

- Visual similarity search for recommending visually appealing thumbnails

- Audio-based search for finding specific moments in videos

- Semantic search for understanding complex plot descriptions

Results:

- 28% increase in user engagement

- 15% reduction in content abandonment rates

2. E-commerce

A large online marketplace integrated semantic and visual search capabilities:

- Image-based product search allowing users to find items by uploading photos

- Semantic understanding of product descriptions and user reviews

Outcomes:

- 35% improvement in search relevance

- 22% increase in conversion rates for visually driven categories

3. Healthcare

A medical imaging company implemented multimodal search for their vast database of medical images and reports:

- Semantic search across medical terminologies and natural language reports

- Visual similarity search for identifying similar medical conditions

Impact:

- 40% reduction in time spent searching for relevant case studies

- 30% increase in the discovery of rare or similar cases

Challenges and Considerations

While semantic and multimodal search offer tremendous benefits, there are several challenges to consider:

- Computational complexity: These advanced search techniques often require significant computational resources.

- Data quality and preprocessing: The effectiveness of search depends heavily on the quality and consistency of the underlying data.

- Privacy and security: Handling sensitive data, especially in multimodal scenarios, requires robust security measures.

- Scalability: Ensuring performance as data volumes grow can be challenging.

- Interpretability: Understanding why certain results are returned can be more complex than with traditional search methods.
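
To give the computational and scalability points a sense of scale, here is a back-of-the-envelope estimate of the memory needed just to hold raw embedding vectors. The collection size is a hypothetical example; 768 dimensions matches BERT-base, and 4 bytes per value assumes 32-bit floats. Index structures and metadata would add overhead on top of this figure.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Memory for the raw vectors alone, excluding any index overhead."""
    return num_vectors * dimensions * bytes_per_value


# Hypothetical collection: 10 million assets, one 768-dim float32 vector each.
float32_bytes = raw_vector_bytes(10_000_000, 768)
# The same collection with vectors quantized to 1 byte per value.
int8_bytes = raw_vector_bytes(10_000_000, 768, bytes_per_value=1)

print(f"float32: {float32_bytes / 1e9:.2f} GB")
print(f"int8:    {int8_bytes / 1e9:.2f} GB")
```

Estimates like this help explain why quantization and approximate indexes are common once collections reach millions of assets.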

Future Trends and Innovations

As we look to the future of semantic and multimodal search in digital asset management, several exciting trends are emerging:

- Zero-shot learning: The ability to search for concepts the underlying model was never explicitly trained on.

- Multimodal transformers: Models like CLIP (Contrastive Language-Image Pre-training) enable powerful cross-modal understanding.

- Federated search: Searching across multiple, distributed data sources while maintaining data privacy.

- Quantum computing: Potential for dramatically faster similarity search in high-dimensional spaces.

- Augmented reality integration: Combining real-world visual input with digital asset search.

Conclusion

Semantic and multimodal search technologies are revolutionizing digital asset management, offering unprecedented capabilities for discovering, utilizing, and extracting value from diverse data sets. As these technologies continue to evolve, they promise to unlock new levels of productivity and innovation across industries.

For developers and organizations looking to stay ahead in the digital asset management space, embracing these advanced search paradigms is becoming increasingly crucial. Tools like Similarix, developed by Simeon Emanuilov of UnfoldAI, are paving the way by adding intelligent AI layers to storage systems, enabling semantic search capabilities for S3 buckets and beyond.

As we move forward, the ability to effortlessly navigate and extract insights from our ever-growing digital universes will become a key differentiator. By investing in semantic and multimodal search technologies today, businesses can position themselves at the forefront of the next generation of digital asset management.

The future of search is here, and it speaks the language of context, meaning, and multi-dimensional understanding. Are you ready to listen?